Apache Flink: What Is It and Why Should You Care?

In today’s fast-paced world, businesses need to process and understand data in real time. Whether it’s tracking website activity, detecting fraud, or processing data from IoT devices, getting insights quickly is key. This is where Apache Flink comes in. It helps companies process large volumes of data as it happens. In this post, we’ll look at what Apache Flink is, its main features, and how it works with Apache Kafka.

What is Apache Flink?

Apache Flink is an open-source, distributed framework for processing data streams in real time or in batches. This means it can work with data that keeps coming in continuously (an unbounded stream, like a live feed of information) or with a large, fixed set of data all at once (a bounded data set, like a batch job).

Flink is a great fit for applications that need to act on data immediately, such as:

- Monitoring websites to see what users are doing.

- Tracking sensor data from IoT devices.

- Detecting fraud in financial transactions.

What makes Flink stand out is that it can handle both real-time streams and batch jobs, giving developers flexibility.

Key Features of Apache Flink

1. Stream and Batch Processing in One Tool

Flink can process both streams of data in real time and large amounts of data in batches. This means you don’t need different tools for different types of jobs.

- Stream Processing: Imagine getting a constant flow of sensor data from devices. Flink can handle that data as it arrives, in real time.

- Batch Processing: If you have a big set of data, like a year's worth of sales records, Flink can process that all at once.
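
To make the "one tool for both" idea concrete, here is a minimal sketch in plain Python. It does not use the Flink API; `running_total` and `sensor_feed` are hypothetical names invented for illustration. The point is only that the same processing logic can run over a bounded data set and over a feed that delivers values one at a time.

```python
from typing import Iterable, Iterator


def running_total(readings: Iterable[float]) -> Iterator[float]:
    """Yield the running sum after each reading, one result per input."""
    total = 0.0
    for value in readings:
        total += value
        yield total


def sensor_feed() -> Iterator[float]:
    """Hypothetical stand-in for a live feed; a real one would never end."""
    for value in (1.0, 2.0, 3.0):
        yield value


# Batch: a bounded data set that is fully available up front.
batch_result = list(running_total([1.0, 2.0, 3.0]))

# Stream: each result is produced as soon as its reading arrives.
stream_result = []
for total in running_total(sensor_feed()):
    stream_result.append(total)

print(batch_result == stream_result)
```

Both runs go through the same function, which is the practical benefit: the logic is written once and applied to either kind of input.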

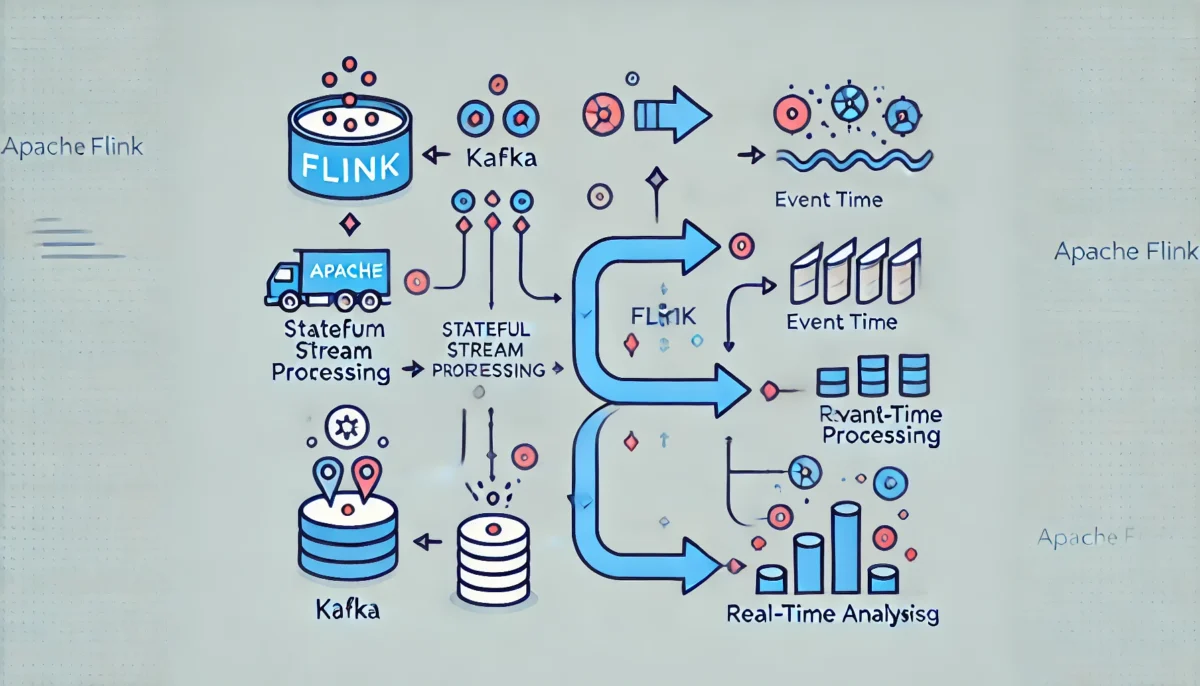

2. Stateful Stream Processing

Flink can keep track of information across events over time, which is known as stateful processing. This is useful when you need to remember past events while processing new ones.

For example, if you’re detecting fraud, Flink can track a user’s transaction history and flag any suspicious activity based on their past actions.
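
The fraud example can be sketched in plain Python as follows. This is not Flink code: the `FraudDetector` class, the `SPIKE_FACTOR` threshold, and the "3x the running average" rule are all assumptions made up for illustration. What it shows is the core idea of keyed state, where each user has their own remembered history.

```python
from collections import defaultdict
from typing import Dict, Tuple

# Hypothetical rule: flag a transaction above 3x the user's running average.
SPIKE_FACTOR = 3.0


class FraudDetector:
    def __init__(self) -> None:
        # Per-user state: (number of transactions seen, sum of their amounts).
        self.state: Dict[str, Tuple[int, float]] = defaultdict(lambda: (0, 0.0))

    def process(self, user: str, amount: float) -> bool:
        """Return True if this transaction looks suspicious, then update state."""
        count, total = self.state[user]
        suspicious = count > 0 and amount > SPIKE_FACTOR * (total / count)
        self.state[user] = (count + 1, total + amount)
        return suspicious


detector = FraudDetector()
events = [("alice", 20.0), ("bob", 500.0), ("alice", 30.0), ("alice", 400.0)]
flags = [detector.process(user, amount) for user, amount in events]
print(flags)
```

Only the last transaction is flagged: by then the detector remembers that alice's earlier transactions averaged 25, so 400 stands out. Bob's 500 is not flagged because there is no history for him yet.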

3. Event Time Processing

Data doesn’t always arrive in the right order. Sometimes information is delayed, like sensor data from a remote location. Flink supports event time processing, which processes data based on when an event actually happened, not when it arrived. It uses watermarks to track how far event time has progressed, so it can decide how long to wait for late-arriving data before producing a result.
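
Here is a rough plain-Python sketch of the idea, not the Flink API. It counts events in 10-second windows based on each event's own timestamp and waits a fixed amount of time for stragglers before closing a window. The window size, the waiting time, and the function name `count_by_event_time` are assumptions chosen for the example.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

WINDOW_SIZE = 10       # seconds per window (assumed)
MAX_OUT_OF_ORDER = 5   # how long to wait for stragglers, in seconds (assumed)


def count_by_event_time(events: List[Tuple[int, str]]) -> List[Tuple[int, int]]:
    """Count events per window, using the time each event happened.

    `events` holds (event_time_seconds, payload) pairs in ARRIVAL order.
    Returns (window_start, count) pairs in the order the windows close.
    """
    open_windows: Dict[int, int] = defaultdict(int)
    closed: List[Tuple[int, int]] = []
    watermark = float("-inf")

    for event_time, _payload in events:
        window_start = event_time - (event_time % WINDOW_SIZE)
        if window_start + WINDOW_SIZE <= watermark:
            continue  # too late: this window was already emitted, so drop it
        open_windows[window_start] += 1

        # The watermark trails the largest timestamp seen so far.
        watermark = max(watermark, event_time - MAX_OUT_OF_ORDER)
        for start in sorted(open_windows):
            if start + WINDOW_SIZE <= watermark:
                closed.append((start, open_windows.pop(start)))

    # End of input: emit whatever is still open.
    for start in sorted(open_windows):
        closed.append((start, open_windows.pop(start)))
    return closed


arrivals = [(1, "a"), (12, "b"), (4, "c"), (8, "d"), (17, "e"), (3, "f"), (25, "g")]
windows = count_by_event_time(arrivals)
print(windows)
```

In this run, the events stamped 4 and 8 arrive after the event stamped 12 but are still counted in the first window, because the watermark has not passed it yet. The event stamped 3 arrives after that window has closed, so this sketch simply drops it.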

4. Fault Tolerance and Low Latency

Flink is designed to process data with low latency, often at the millisecond level depending on the workload. It is also fault tolerant: it periodically saves checkpoints of its state, so if something goes wrong (like a system failure), it can restore the latest checkpoint and continue from there. Combined with a source that can replay data, such as Kafka, this lets Flink recover without losing data. This makes it reliable for critical applications like financial trading or monitoring systems.
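
The recovery idea can be illustrated with a small plain-Python sketch. It is a simplification and not how Flink is implemented internally: the `CountingJob` class is hypothetical, and a Python list stands in for a source that can be re-read from any position. The key point is that the snapshot stores the read position together with the state, so a restarted job neither skips nor double-counts records.

```python
import copy
from typing import Dict, List, Tuple


class CountingJob:
    """Counts words from a replayable source, with snapshot and restore."""

    def __init__(self, source: List[str]) -> None:
        self.source = source
        self.offset = 0                    # next position to read
        self.counts: Dict[str, int] = {}   # the job's state

    def step(self) -> None:
        word = self.source[self.offset]
        self.counts[word] = self.counts.get(word, 0) + 1
        self.offset += 1

    def checkpoint(self) -> Tuple[int, Dict[str, int]]:
        # Snapshot the read position together with the state.
        return self.offset, copy.deepcopy(self.counts)

    def restore(self, snapshot: Tuple[int, Dict[str, int]]) -> None:
        self.offset, counts = snapshot
        self.counts = copy.deepcopy(counts)


source = ["a", "b", "a", "c", "a"]
job = CountingJob(source)
job.step()
job.step()
snapshot = job.checkpoint()
job.step()  # this work happens after the checkpoint and is lost in the crash

# Simulated failure: start a fresh job and resume from the snapshot.
recovered = CountingJob(source)
recovered.restore(snapshot)
while recovered.offset < len(source):
    recovered.step()
print(recovered.counts)
```

After recovery, the third record is read again from the source, so the final counts are the same as if the failure had never happened.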

How Apache Flink and Apache Kafka Work Together

Apache Kafka is often used with Flink to handle the movement of data. Kafka is a system that stores and transfers data streams between producers (who generate data) and consumers (who process it).

Kafka as the Data Source

Kafka collects data from various sources, like websites, sensors, or logs, and stores it in topics that Flink can read from. Flink can then read this data from Kafka and process it in real time.

Flink as the Data Processor

Flink processes the data it receives from Kafka. It might transform, analyze, or enrich this data and then send the results to another system, database, or back to Kafka.

For example, Flink could read customer data from Kafka, process it to generate recommendations, and write those recommendations back to Kafka, where the service behind the user’s app can read them.

Kafka as the Data Sink

After processing, Flink can write the results to Kafka, which stores and distributes them. Other applications can then read the processed data from Kafka.
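
The source, processor, and sink roles described above can be sketched in plain Python. Nothing here talks to a real Kafka cluster or uses the Flink API: in-memory queues stand in for topics, and the topic names, `produce`, `recommend`, and `run_job` are all hypothetical names made up for the example.

```python
from collections import deque
from typing import Deque, Dict

# In-memory stand-ins for Kafka topics; the topic names are hypothetical.
topics: Dict[str, Deque[dict]] = {
    "customer-events": deque(),
    "recommendations": deque(),
}


def produce(topic: str, record: dict) -> None:
    """Append a record to a topic, as a producer would."""
    topics[topic].append(record)


def recommend(event: dict) -> dict:
    """Hypothetical processing step: suggest an accessory for the viewed item."""
    return {"user": event["user"], "suggestion": event["viewed"] + "-case"}


def run_job(source: str, sink: str) -> None:
    """Read every pending record from `source`, transform it, write to `sink`."""
    # Simplification: popleft() removes the record, whereas Kafka retains
    # records and tracks each consumer's position instead.
    while topics[source]:
        produce(sink, recommend(topics[source].popleft()))


produce("customer-events", {"user": "u1", "viewed": "phone"})
produce("customer-events", {"user": "u2", "viewed": "laptop"})
run_job("customer-events", "recommendations")
results = list(topics["recommendations"])
print(results)
```

Any other application could now read from the `recommendations` queue without knowing how the records were produced, which is the decoupling that Kafka provides in a real pipeline.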

Real-World Use Cases for Apache Flink

1. Real-Time Analytics: Companies use Flink to monitor things like website traffic, customer behaviour, or IoT devices in real time.

2. Fraud Detection: Banks and payment platforms use Flink to spot suspicious transactions as they happen.

3. Recommendation Systems: Websites and apps use Flink to analyze user behaviour and offer personalised recommendations instantly.

4. Event-Driven Applications: Flink powers applications that need to act on events as they occur, like sending alerts when certain conditions are met.

Conclusion

Apache Flink is a powerful tool for processing real-time data streams. It is reliable and flexible, and it can handle both live data streams and batch jobs. When combined with Apache Kafka, it becomes part of a robust data pipeline for workloads ranging from monitoring sensor data to building real-time analytics dashboards.

If your application needs real-time insights, Apache Flink is a great solution.
